Apache Pinot is a high-performance database engineered to serve analytical queries at extremely high concurrency, with latencies as low as tens of milliseconds. It excels at ingesting streaming data from sources like Apache Kafka and Apache Pulsar and is optimized for real-time, user-facing analytics applications.

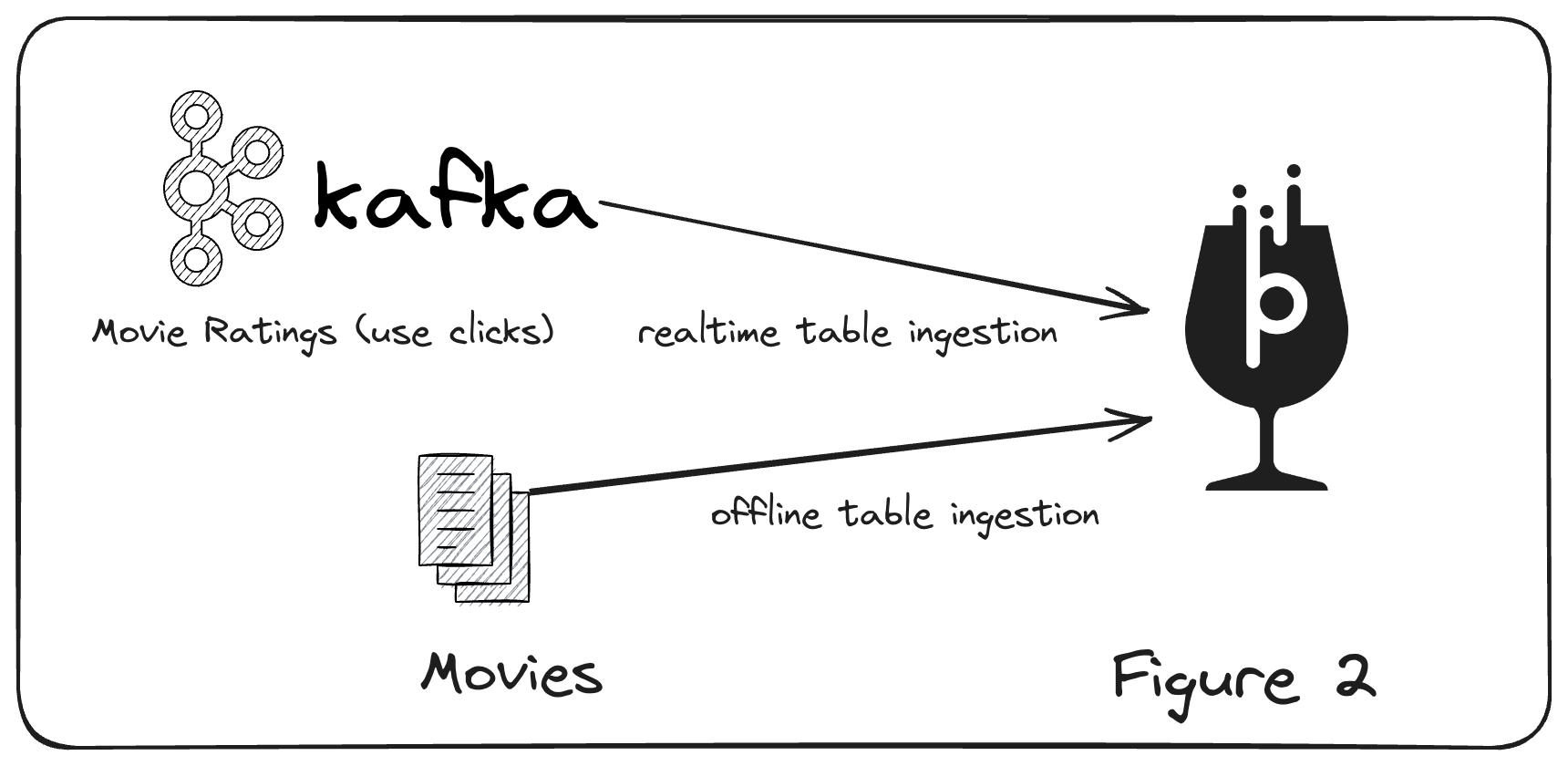

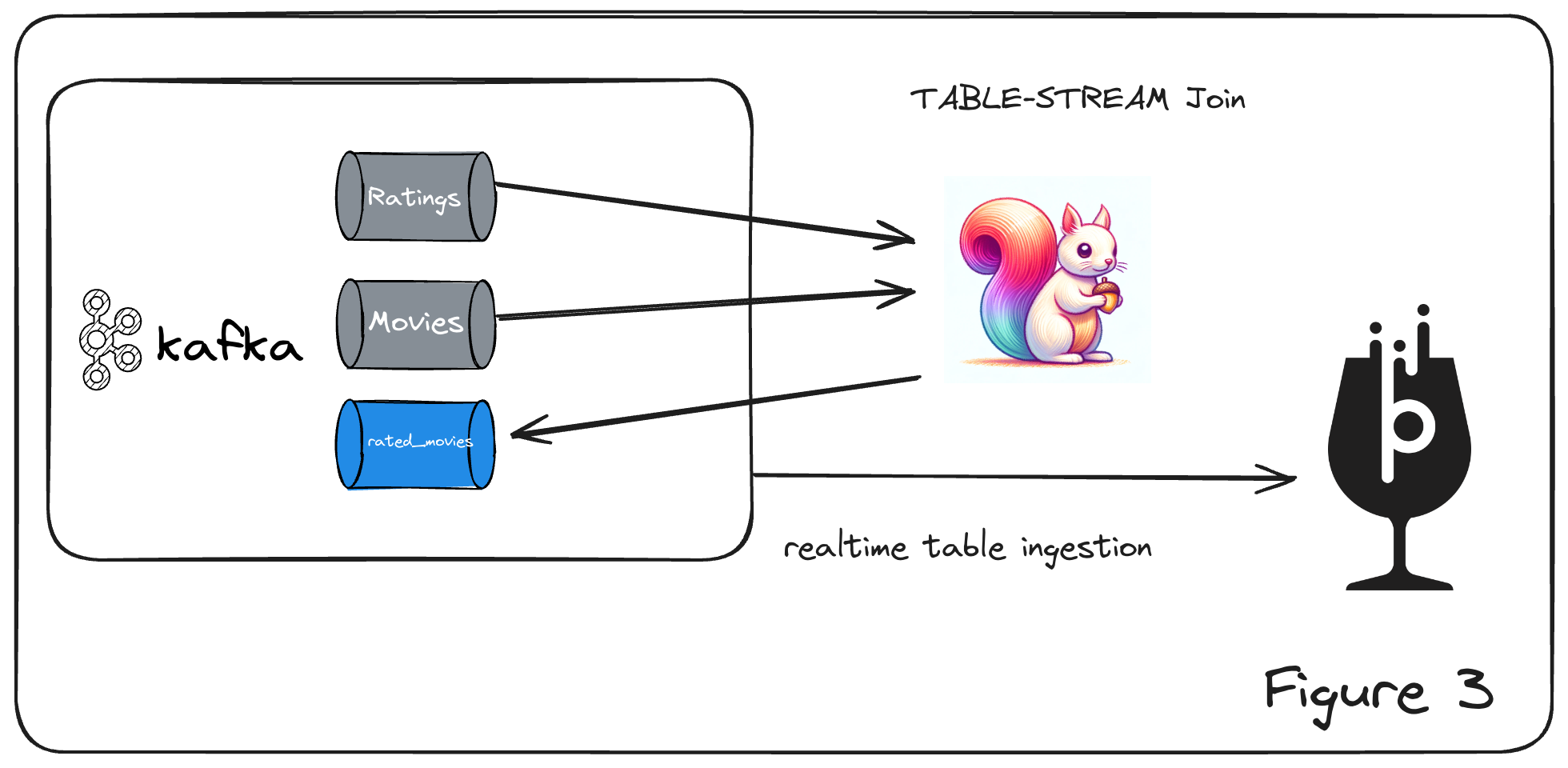

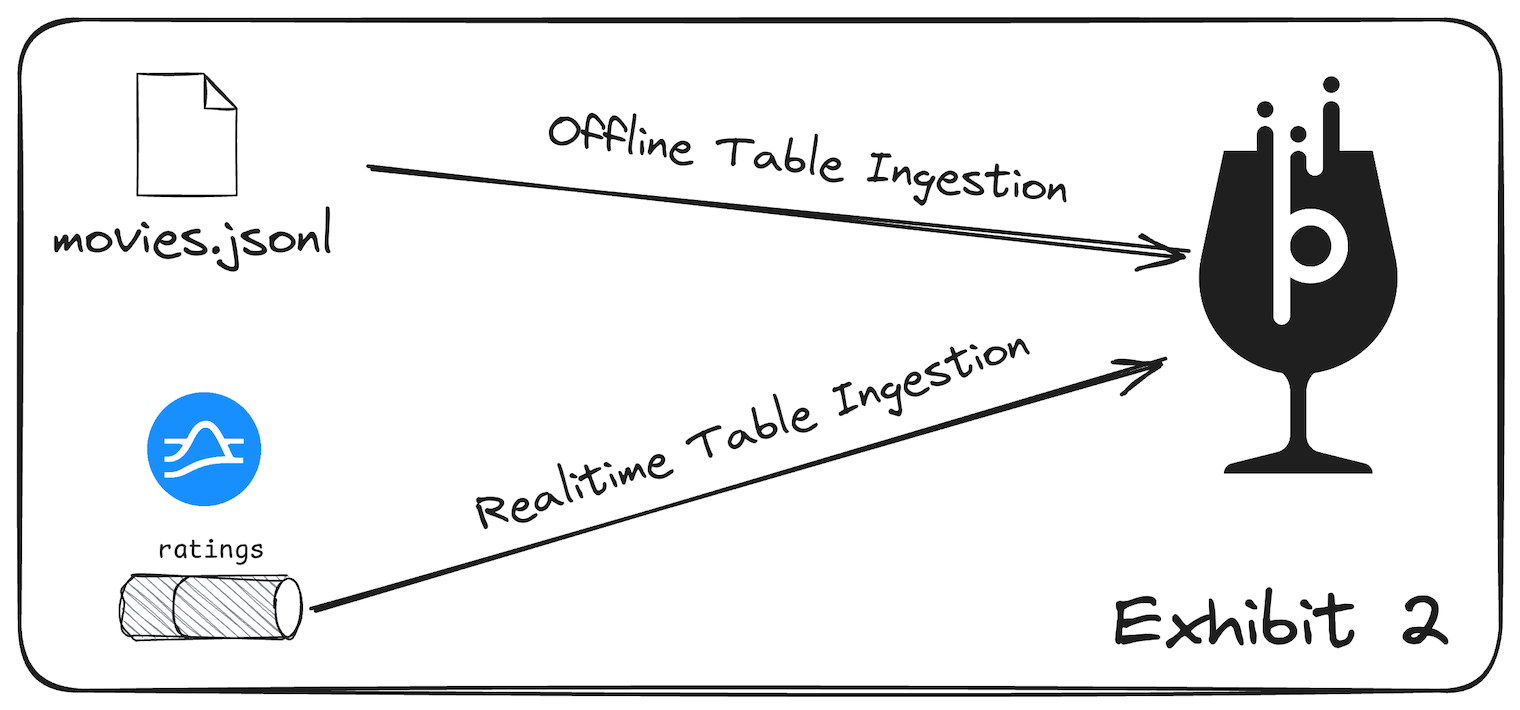

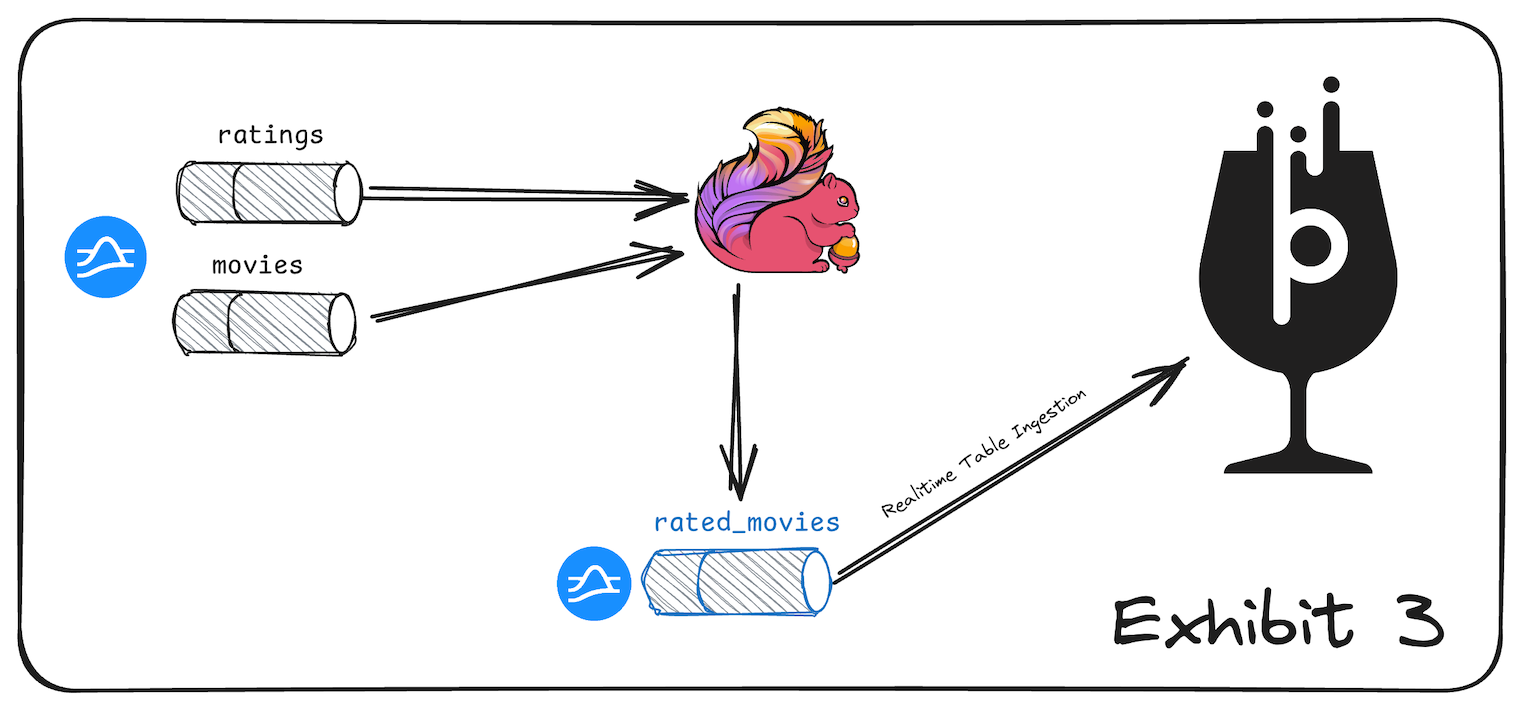

In this repo, we will explore the architectures of Apache Kafka, Apache Pulsar, Apache Flink, and Apache Pinot. We will run a local cluster of each system and study the role each plays in a real-time analytics pipeline. You will begin by ingesting static data into Pinot and querying it. You will then add a streaming data source in Kafka or Pulsar and ingest that into Pinot as well, showing how both data sources can work together to enrich an application. Next, we will examine which analytics operations belong in the analytics data store (Pinot) and which should be computed before ingestion; the latter will be implemented in Apache Flink. Having put these technologies to use in hands-on exercises, you will leave prepared to combine them in your own real-time, user-facing analytics applications.

At the successful completion of this training, you will be able to:

-

List the essential components of Pinot, Kafka, Pulsar and Flink.

-

Explain the architecture of Apache Pinot and its integration with Apache Kafka, Apache Pulsar and Apache Flink.

-

Form an opinion about the proper role of Kafka, Flink, and Pinot in a real-time analytics stack.

-

Implement basic stream processing tasks with Apache Flink.

-

Create a table in Pinot, including schema definition and table configuration.

-

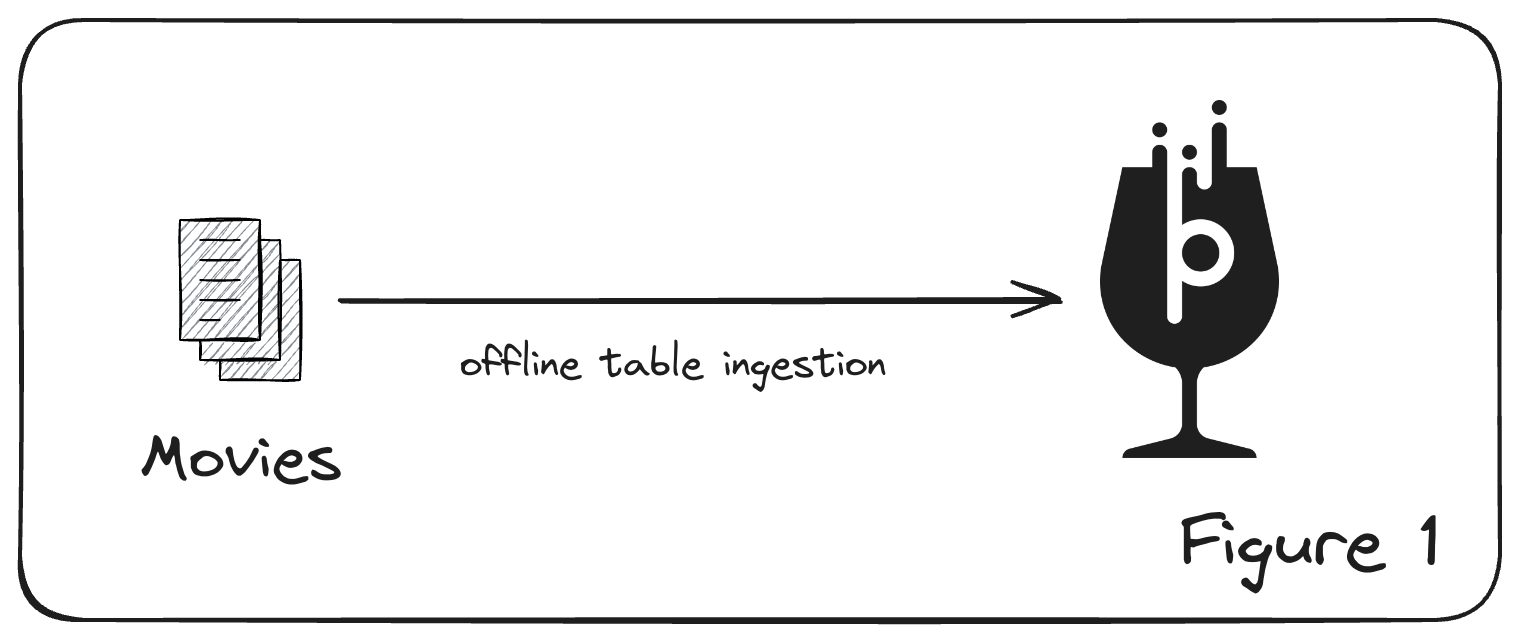

Ingest batch data into an offline table and streaming data from a Kafka topic into a real-time table.

-

Use the Pinot UI to monitor and observe your Pinot cluster.

-

Use the Pinot Query Console

To participate in this workshop, you will need the following:

- Docker Desktop: We will use Docker to run Pinot, Kafka, and Flink locally. If you need to install it, download Docker Desktop from https://www.docker.com/get-started/ and follow the installation instructions, or use OrbStack (https://orbstack.dev).
- Resources: Pinot works well in Docker but is not designed as a desktop solution. Running it locally requires a minimum of 8 GB of memory and 10 GB of disk space.
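The resource minimums above can be checked before the workshop. The following is a minimal sketch, assuming a POSIX `df` and, for the memory check, a running Docker daemon. The helper names (`kb_to_gb`, `meets_minimum`, `free_disk_kb`, `docker_mem_bytes`, `report`) are hypothetical and not part of the workshop repository.

```shell
#!/usr/bin/env bash
# Pre-flight sketch: compare local resources with the minimums stated above.
# All function names here are illustrative, not part of the workshop repo.

MIN_MEM_GB=8
MIN_DISK_GB=10

# Convert kilobytes to whole gigabytes (rounds down).
kb_to_gb() {
  echo $(( $1 / 1024 / 1024 ))
}

# Succeed when the first value is at least the second.
meets_minimum() {
  [ "$1" -ge "$2" ]
}

# Free space, in KB, on the filesystem holding the current directory.
free_disk_kb() {
  df -Pk . | awk 'NR==2 {print $4}'
}

# Memory visible to Docker, in bytes; prints 0 when Docker is unavailable.
docker_mem_bytes() {
  local bytes=""
  if command -v docker >/dev/null 2>&1; then
    bytes=$(docker info --format '{{.MemTotal}}' 2>/dev/null)
  fi
  case "$bytes" in
    ''|*[!0-9]*) echo 0 ;;
    *) echo "$bytes" ;;
  esac
}

# Print one line per resource: name, measured value, and verdict.
report() {
  if meets_minimum "$2" "$3"; then
    echo "$1: $2 GB, OK"
  else
    echo "$1: $2 GB, below the $3 GB minimum"
  fi
}

free_kb=$(free_disk_kb)
disk_gb=$(kb_to_gb "${free_kb:-0}")
mem_gb=$(kb_to_gb $(( $(docker_mem_bytes) / 1024 )))

report "free disk" "$disk_gb" "$MIN_DISK_GB"
report "Docker memory" "$mem_gb" "$MIN_MEM_GB"
```

Docker may report slightly less memory than the amount configured in its settings, so a result just under the minimum is a prompt to review the Docker resource settings rather than a hard failure.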

To ensure you’re fully prepared for the workshop, please follow these guidelines:

- Version Control:
  - Check out the latest version of the workshop repository to access all necessary materials and scripts.

        git clone https://github.com/gAmUssA/uncorking-analytics-with-pinot-kafka-flink.git
        cd uncorking-analytics-with-pinot-kafka-flink

- Docker:
  - Install Docker if it isn’t already installed on your system. Download it from https://www.docker.com/products/docker-desktop.
  - Before the workshop begins, pull the necessary Docker images to ensure you have the latest versions:

        make pull_images

- Integrated Development Environment (IDE):
  - Install Visual Studio Code (VSCode) to edit and view the workshop materials comfortably. Download VSCode from https://code.visualstudio.com/.
  - Add the AsciiDoc extension from the Visual Studio Code marketplace to enhance your experience with AsciiDoc-formatted documents.

- Validate Setup:
  - Before diving into the workshop exercises, verify that all Docker containers needed for the workshop are running:

        docker ps

  - This command lists the running containers, so a container that is missing from the output has exited or failed to start.

- Using VSCode:
  - Open the workshop directory in VSCode to access and edit files easily. Use the AsciiDoc extension to view the formatted documents and instructions:

        code .

- Docker Issues:
  - If Docker containers fail to start or crash, use the following command to inspect the logs and identify potential issues:

        docker logs <container_name>

  - This can help in diagnosing problems with specific services.

- Network Issues:
  - Ensure no applications are blocking the required ports. If ports are in use or blocked, reconfigure the services to use alternative ports or stop the conflicting applications.

- Removing Docker Containers:
  - To clean up after the workshop, you might want to remove the Docker containers used during the session to free up resources:

        make stop_containers

  - Additionally, prune unused Docker images and volumes to recover disk space:

        docker system prune -a
        docker volume prune

These steps and tips are designed to prepare you thoroughly for the workshop and to help address common issues that might arise, ensuring a focused and productive learning environment.
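For the port conflicts described under Network Issues, a quick check can show which ports are already taken before the containers start. This is a minimal sketch that needs bash, since it relies on the `/dev/tcp` pseudo-device. The port numbers are assumed common defaults for the Pinot controller (9000), Pinot broker (8099), Kafka (9092), and Flink web UI (8081), not values taken from the workshop repository, and `port_in_use` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Port-conflict sketch. The ports below are ASSUMED defaults, not taken from
# the workshop repository; check its Docker configuration for the real ones.
PORTS="9000 8099 9092 8081"

# Succeed when something accepts TCP connections on 127.0.0.1:$1.
# The subshell opens a connection through bash's /dev/tcp and closes it on exit.
port_in_use() {
  (exec 3<>"/dev/tcp/127.0.0.1/$1") 2>/dev/null
}

for port in $PORTS; do
  if port_in_use "$port"; then
    echo "port $port: in use"
  else
    echo "port $port: free"
  fi
done
```

Run it before starting the workshop containers; once they are up, the ports they publish will naturally show as in use.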

The practical exercises of this workshop are divided into three distinct parts, each designed to give you hands-on experience with Apache Pinot’s capabilities in different scenarios. Below are the details and objectives for each part: